The "Research Translator" Prompt

How a 5.5K Account Turned One Paper Into 760K Impressions

Someone with 5,500 followers just outperformed accounts 20x their size.

One post about an NVIDIA research paper. 760,000+ impressions. 2,000 new followers. 37% audience growth from ONE post.

And no, he didn’t “get lucky with the algorithm.”

Most technical professionals share research papers like they’re filing a tax report. Here’s the abstract. Here’s the link. Here’s a bullet point summary that reads like a sleeping pill.

12 likes. 3 comments from bots. Dead by lunch.

Meanwhile, the pros are doing something different. They’re not summarizing papers. They’re translating insights into career decisions.

I call it the “Research Translator” prompt, and it’s the difference between being a paper-summarizer and becoming the person everyone follows for industry insight.

🎁 Here’s What You’ll Get Today (free):

The 4 psychological triggers that make research content go viral (and why most “paper summaries” fail)

The 7-part anatomy of the perfect research translator post (steal this exact structure)

💎 Paid Subscribers Also Get:

The 5-step process to build your own research translator content (with time estimates)

4 advanced variations to multiply your output: the “Myth Buster”, the “Career Signal”, the “Cost Killer”, the “Trend Confirmation”

The 4 classic mistakes that kill research posts (and how to avoid them)

The copy-paste “Research Translator” prompt that turns any dense paper into career-relevant viral content in 3 minutes

🔪 The Brutal TL;DR:

The Mistake: Sharing papers like a librarian (here’s the abstract, here’s the link)

The Play: Translate research into career and business implications your audience cares about

The Psychology: People don’t share papers. They share opinions that make them look smart.

The Result: Your research posts become the reference point for industry conversations

Let me show you exactly how this works.

🧠 The Psychology Behind Research Translator Posts

Trigger 1: The “First Mover” Effect

When someone shares new research with a strong opinion, they become the reference point for everyone else’s conversation.

The post doesn’t just inform. It frames the debate.

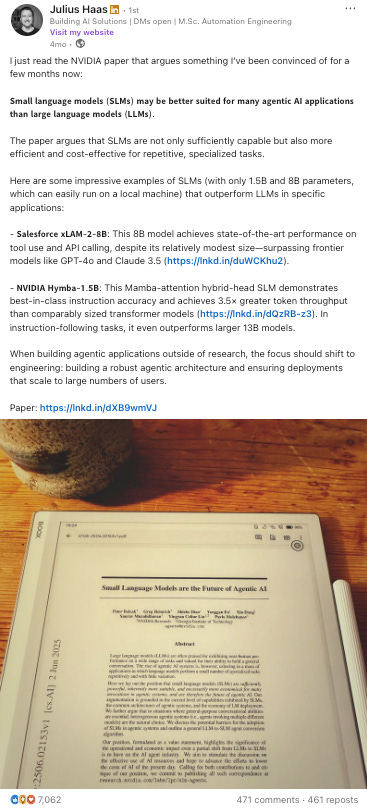

Notice how this post doesn’t say “Here’s an interesting paper.” It says “SLMs may be better suited for many agentic AI applications than LLMs.” That’s a position. That’s a flag planted.

Everyone who discusses this topic afterward will either agree or disagree with that framing. Either way, the original poster wins. They set the terms.

Why it works:

“I’ve been saying this for months” = “I’m ahead of the curve too”

“Interesting but I disagree because...” = “I’m smart enough to challenge this”

Every comment is a status signal. Your post becomes the stage.

Trigger 2: The “Career Anxiety” Lever

Technical professionals are constantly worried: Am I learning the right things? Is my stack becoming obsolete? Am I missing something important?

This post hits that nerve directly: “When building agentic applications outside of research, the focus should shift to engineering.”

That’s not just information. That’s career guidance disguised as research summary.

The reader immediately asks themselves: “Am I too focused on model size? Should I be paying more attention to SLMs? Is my current approach wrong?”

Why it works:

“Am I too focused on model size?” = self-doubt activated

“Should I be paying more attention to SLMs?” = FOMO triggered

“Is my current approach wrong?” = urgency to act

They didn’t ask themselves these questions before reading. Now they can’t stop.

Trigger 3: The “Underdog Validation” Effect

There’s something deeply satisfying about seeing the conventional wisdom get challenged.

Big model = better? Actually, no. Small models are beating GPT-4o and Claude 3.5 at specific tasks.

This isn’t just interesting. It’s emotionally satisfying for anyone who’s ever felt intimidated by the “bigger is better” hype. It validates a suspicion many had but couldn’t prove.

Why it spreads:

“Finally someone said it” = tribal belonging activated

“Sharing this with my team” = social currency earned

“I always thought SLMs were underrated” = See? I was right

Trigger 4: The “Actionable Intelligence” Signal

Most research summaries end with “interesting implications.” This post ends with a business directive: “The focus should shift to engineering: building a robust agentic architecture.”

That’s not analysis. That’s a to-do list for engineering teams.

It transforms passive reading into potential action. The reader doesn’t just learn something — they now have something to discuss in their next team meeting.

Why it works:

“Sent this to my CTO” = makes them look valuable

“Saving this for our architecture review” = immediate utility

“This confirms what we should prioritize” = decision support

Interesting gets a like. Useful gets saved, shared, and remembered.

🧱 Anatomy of the Perfect Research Translator Post

Let’s break down exactly why this post generated 760K impressions.

Part 1: The Personal Conviction Opening

I just read the NVIDIA paper that argues something I’ve been convinced of for a few months now:

Most people introduce research like they’re sharing someone else’s idea. This opens like a thought leader who just got backup.

Why it works:

“I’ve been convinced of for a few months now” — establishes the author as ahead of the curve

“argues something” — frames the paper as supporting their position, not the other way around

Creates authority without arrogance: “I thought this first, and now NVIDIA agrees”

Part 2: The Bold Claim

Small language models (SLMs) may be better suited for many agentic AI applications than large language models (LLMs).

This is the heartbeat of the entire post. One sentence that flips the industry’s default assumption.

Why it works:

Bold enough to be interesting, specific enough to be testable (agentic applications, not “everything”)

Uses “may be” — authoritative enough to claim, safe enough to defend

Creates an immediate internal debate: “Wait... is that true?”

Most people bury the insight in paragraph three. This post leads with it. That’s why it stops the scroll.

Part 3: The Benefit-First Framing

The paper argues that SLMs are not only sufficiently capable but also more efficient and cost-effective for repetitive, specialized tasks.

This is where academic becomes actionable. The author doesn’t explain what SLMs are. They explain why you should care.

Why it works:

Focuses on practical benefits, not technical capabilities

Translates academic language into business language: “efficient and cost-effective”

“Repetitive, specialized tasks” — three words that make engineers question their architecture

Part 4: The Proof Stack

Here are some impressive examples of SLMs (with only 1.5B and 8B parameters, which can easily run on a local machine) that outperform LLMs in specific applications:

- 𝗦𝗮𝗹𝗲𝘀𝗳𝗼𝗿𝗰𝗲 𝘅𝗟𝗔𝗠-𝟮-𝟴𝗕: This 8B model achieves state-of-the-art performance on tool use and API calling...

- 𝗡𝗩𝗜𝗗𝗜𝗔 𝗛𝘆𝗺𝗯𝗮-𝟭.𝟱𝗕: This Mamba-attention hybrid-head SLM demonstrates best-in-class instruction accuracy...

Claims without evidence get scrolled past. Claims with specific models, numbers, and benchmarks get saved.

Why it works:

Specific model names and parameter counts = instant credibility (most readers won’t verify them, but knowing they could builds trust)

“Can easily run on a local machine” — makes it personally relevant

“Outperform LLMs” — triggers curiosity about how that’s possible

Part 5: The Competitive Context

surpassing frontier models like GPT-4o and Claude 3.5

One phrase that makes the post shareable. You’re not just claiming small models work. You’re claiming they beat the best.

Why it works:

Name-drops the titans everyone knows

Creates a David vs. Goliath narrative people love to spread

“Frontier models” signals you speak the industry language

Nobody shares “SLMs are pretty good.” They share “SLMs are beating GPT-4o.”

Part 6: The Business Directive

When building agentic applications outside of research, the focus should shift to engineering: building a robust agentic architecture and ensuring deployments that scale to large numbers of users.

This is where most research posts die. They inform but don’t direct. This one tells you exactly what to prioritize.

Why it works:

Transitions from “here’s what the paper says” to “here’s what you should do”

Specific guidance: focus on architecture, not model size

Addresses the actual concern of engineering leaders making decisions right now

Research without a directive is interesting. Research with a directive is useful.

Part 7: The Resource Stack

Paper: https://...

The simplest part, but don’t skip it. The link transforms your opinion into verifiable insight.

Why it works:

Provides the source for instant credibility

Enables readers to go deeper (most won’t, but they like knowing they could)

Signals transparency: “I’m not making this up, here’s the receipts”

Link = trust. No link = “just another hot take.”
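If you draft posts in a script or feed a structure into a prompt, the seven parts above can be sketched as a simple checklist. This is only an illustration, not how the original post was written: the class name, field names, and the `missing_parts` / `render` helpers are all hypothetical, and the example text is taken from the excerpts quoted above.

```python
from dataclasses import dataclass, fields


@dataclass
class ResearchTranslatorPost:
    """Hypothetical container for the seven parts (names are illustrative)."""
    conviction_opening: str   # Part 1: personal conviction opening
    bold_claim: str           # Part 2: the one-sentence claim
    benefit_framing: str      # Part 3: why the reader should care
    proof_stack: list[str]    # Part 4: specific models, numbers, benchmarks
    competitive_context: str  # Part 5: comparison against known names
    business_directive: str   # Part 6: what to prioritize
    resource_link: str        # Part 7: link to the paper

    def missing_parts(self) -> list[str]:
        """Return the names of any parts that were left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def render(self) -> str:
        """Join the non-empty parts in order, separated by blank lines."""
        bullets = "\n".join(f"- {item}" for item in self.proof_stack)
        parts = [
            self.conviction_opening,
            self.bold_claim,
            self.benefit_framing,
            bullets,
            self.competitive_context,
            self.business_directive,
            f"Paper: {self.resource_link}" if self.resource_link else "",
        ]
        return "\n\n".join(p for p in parts if p)


post = ResearchTranslatorPost(
    conviction_opening="I just read the NVIDIA paper that argues something "
                       "I've been convinced of for a few months now:",
    bold_claim="Small language models (SLMs) may be better suited for many "
               "agentic AI applications than large language models (LLMs).",
    benefit_framing="The paper argues that SLMs are not only sufficiently "
                    "capable but also more efficient and cost-effective for "
                    "repetitive, specialized tasks.",
    proof_stack=[
        "Salesforce xLAM-2-8B: This 8B model achieves state-of-the-art "
        "performance on tool use and API calling...",
    ],
    competitive_context="surpassing frontier models like GPT-4o and Claude 3.5",
    business_directive="When building agentic applications outside of "
                       "research, the focus should shift to engineering.",
    resource_link="",  # left empty on purpose to show the check
)

print(post.missing_parts())  # → ['resource_link']
print(post.render())
```

The check catches the most common omission before you hit publish: a draft with no source link.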

The Secret Sauce:

Most posts just inform.

This one says ‘here’s what it means and what to do about it.’

That’s the difference between 12 likes and 760K impressions.